The exploitative cost of GPTs

RLHF has been fueling the rise of GenAI, but at what cost?

As OpenAI’s headquarters in San Francisco celebrates ChatGPT’s second anniversary, the sun sets on Sama offices in Nairobi, Kenya.

Sama was once a key partner in the manual data labeling process that powered the GPT-3.5 model’s public launch, but there is no party here. Contractors at Sama work long hours manually labeling and identifying characteristics of multimodal data, paid up to 259 KES (roughly $2 USD) per tracked hour.

At one point, Sama was contracted to label harmful content for the safety fine-tuning of OpenAI’s models. Workers were exposed to disturbing media and required to analyze and label it, from descriptions of child sexual abuse to images and videos of human execution and gore. An exposé detailed the poor working conditions surrounding the task, and Sama quickly backed away from sensitive content contracts in an effort to preserve its public image.

The contractors in this story will never share in the rewards of the AI bubble, nor will they receive fair wages for that exposure. Instead, they are either let go from their contract positions or reassigned to new labeling workstreams, where the exploitative work continues on a different dataset. All the while, OpenAI stakeholders and employees reap the harvest.

This is not a new story

While I work on my “investigative journalism punchiness,” I’ll point you to the more immersive read: TIME published that exposé in 2023, describing the lack of compensation and mental health support for the contractors involved in sensitive content labeling, work that powers a process known as reinforcement learning from human feedback (RLHF).

60 Minutes recently put faces to the names: a few of the associates involved in suing Sama and OpenAI for the emotional damage they bore. To see people who dreamed of something better but faced a much worse reality is heartbreaking.

Addressing the elephant in the room

While artificial intelligence has long held the promise of enabling advances in research, technology, and society, we are well aware of the cracks in the foundation. The most fundamental concern is the inequality AI might create in the world, by empowering those who can wield its potential through use cases and widening the gap between them and those whose labor built its foundation. This quickly becomes an ethics class discussion once we also account for the widening wealth gap, dwindling natural resources, and the overall deregulation of the technology industry.

We saw something similar with AI art, i.e., image diffusion models trained on images, photos, and drawings scraped from across the web. For many of those artists, legal recourse isn’t really an option unless they’re backed by a corporate monolith with limitless resources.

I don’t necessarily think this Sama incident is a direct result of OpenAI blindly saying “Hey, let’s find a country that is desperate for work and give them a meager wage to click through all of this violent, terrorizing, and horrific data.” I think it’s an indirect result of neglect through the chain: a client wants work completed at the fastest and cheapest rate, a broker takes cuts from a reasonable wage, and middle management forces a deadline on low-wage workers.

Human-centered vs. AI-centered

Sama is a great example of “what could be” for our microcosm of the technology workforce within the United States. It becomes egregiously difficult to keep leading and supporting work in this field, especially under the threat of building the very thing that might throw us aside. What we hope for is change, while also being looked to as leaders in the space who can say “hey, this doesn’t seem OK.” All this to say: we should be wary of these kinds of past failures and continue to evolve our approaches to building AI use cases that fit human-centered problems.

I haven’t heard anyone make the argument that this type of sacrifice is required for progress. My main assumption as to why is that we are all human and can empathize with the trauma that Sama workers went through. But there are some who truly believe that AI is the next “evolution” of what a human is, and that over time we will be outpaced by robotic capabilities.

This might bite me in the ass in about 30 years, but I don’t build AI use cases to satisfy sentient AI needs, nor do I intend to in the future.

If you’re really into reading, I recommend a book from my friend Don Norman: Design for a Better World. In my eyes a situation like this is completely avoidable if a team truly considers a systems point of view and works with the people involved to understand constraints, dependencies, and consequences (versus the method of letting the project manager decide what the cheapest option is). The book goes into quite some detail about humanity-centered design approaches, and is a necessary follow-up read to “that one design book with the red teapot on it.”

What I see coming next

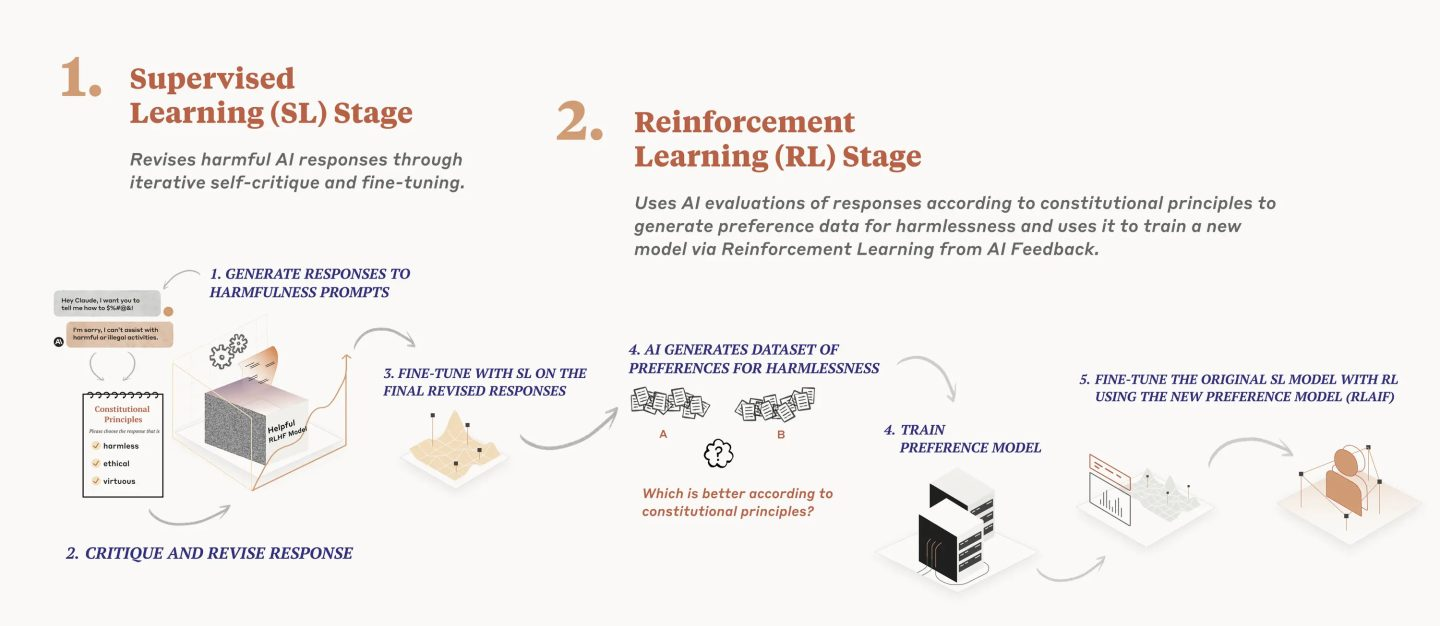

Earlier I mentioned RLHF, the method in which humans provide feedback that is used to reinforce a model’s behavior, with labeling being one component of the full process. What you may hear about as an emerging trend is RLAIF (replace your “H” with AI, what do ya get?). Soon you could imagine the foundation models we have begun to create, with advanced reasoning and chain-of-thought capabilities (e.g., o1), being deployed to effectively “train themselves.” Whether that crosses a bridge into intelligence or sentience is still to be determined.
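To make the “swap the H for AI” idea concrete, here is a minimal Python sketch of the labeling step only. It is not anyone’s actual pipeline: every name in it (`PreferencePair`, `collect_preferences`, `toy_ai_labeler`) is hypothetical, and the “AI” labeler is a toy keyword rule standing in for what would really be a call to a model acting as judge.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class PreferencePair:
    """Two candidate responses to the same prompt (hypothetical structure)."""
    prompt: str
    response_a: str
    response_b: str


# A labeler looks at a pair and returns "a" or "b" for the preferred response.
# In RLHF this is a person; in RLAIF it is another model.
Labeler = Callable[[PreferencePair], str]


def toy_ai_labeler(pair: PreferencePair) -> str:
    """Toy stand-in for a model-based judge (an assumption, not a real API).

    Prefers the response that avoids a blocked term; defaults to "a".
    """
    blocked = ("graphic",)
    a_flagged = any(term in pair.response_a.lower() for term in blocked)
    b_flagged = any(term in pair.response_b.lower() for term in blocked)
    if a_flagged and not b_flagged:
        return "b"
    return "a"


def collect_preferences(
    pairs: List[PreferencePair], labeler: Labeler
) -> List[Tuple[PreferencePair, str]]:
    """Attach a preference label to each pair using whichever labeler is given."""
    return [(pair, labeler(pair)) for pair in pairs]


if __name__ == "__main__":
    pairs = [
        PreferencePair(
            prompt="Describe the scene.",
            response_a="Here is a graphic description of the violence.",
            response_b="I can summarize it without the disturbing details.",
        ),
    ]
    for pair, choice in collect_preferences(pairs, toy_ai_labeler):
        print(pair.prompt, "-> preferred:", choice)
```

The point of the sketch is that `collect_preferences` does not care who or what the labeler is. Who sits behind that function is the difference between a person clicking through harmful content and a model doing it.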

I am a big fan of Anthropic’s parallel approach to this challenge, which they call Constitutional AI: they employ AI assistants guided by “constitutional instructions” to determine which responses are appropriate for the LLM to provide. This has the added benefits of efficiency compared with tens of thousands of humans, as well as transparency to humans about the reasoning and inferences made.