Betting Big on Generative UI

AI hasn't meaningfully made its way into interaction design... yet.

A Google search for "generative UI" turns up a set of articles that loosely define it as generating dynamic user interfaces in real time, much as a conversational AI generates an answer to your query. As hardware and software capabilities evolve, experiences built on generative UI may soon find a home in specific use cases, but that shift will certainly come with a discussion of what it could mean for designers.

UI as a computational model

Unlike a traditional AI agent, a Large Action Model (LAM) uses neuro-symbolic programming to determine the structure of a computer application, understand the relationship between a human and their intentions on the interface, and automate specific tasks through the same inputs a user would use.

This technology aims to fill the gaps that LLMs and computer vision leave on their own. Because it applies to any type of digital product, it can sidestep the need for middleware (such as an API or webhook) entirely and open complex use cases to a wider audience.

I believe data extracted from using a LAM could contribute to Large Interaction Models (LIM): models trained on recorded user inputs and outputs to arrive at a functional, usable interface layout. Combined with a diverse component library and rendering capabilities, UI data models may never have to be "hard-coded" again; they could be procedurally generated from the application state and the user's intent from a previous action.

Not talking about design rendering tools

This is not to be confused with generative design tools like Galileo. The team there clearly positions their tool for design ideation, and it doesn't really scale to larger product innovation.

What we are talking about is closer to the work Vercel has explored on use cases that "render" UI in response to queries. For example, if I ask a conversational AI bot for the weather, it should render that information in the kind of widget I would normally see when I look up the weather.
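To make the weather example concrete, here is a minimal sketch of the idea, assuming the model's answer has already been parsed into a structured intent (for instance through tool or function calling). The names `Intent`, `UINode`, `renderForIntent`, `WeatherCard`, and `TextBlock` are hypothetical and are not Vercel's API; the point is only that the answer maps to a familiar component rather than a paragraph of text.

```typescript
// Hypothetical shape of a parsed user intent. In practice a model would
// produce something like this; here it is simply given as input.
type Intent =
  | { kind: "weather"; city: string; tempC: number; condition: string }
  | { kind: "text"; answer: string };

// Hypothetical UI descriptions that a client-side renderer knows how to draw.
type UINode =
  | { component: "WeatherCard"; props: { city: string; temperature: string; condition: string } }
  | { component: "TextBlock"; props: { text: string } };

// Map an intent to a component from a known library instead of plain text.
function renderForIntent(intent: Intent): UINode {
  switch (intent.kind) {
    case "weather":
      return {
        component: "WeatherCard",
        props: {
          city: intent.city,
          temperature: `${intent.tempC}°C`,
          condition: intent.condition,
        },
      };
    case "text":
      // Fall back to plain text when no richer widget applies.
      return { component: "TextBlock", props: { text: intent.answer } };
    default: {
      // Compile-time exhaustiveness check: adding a new intent kind
      // without handling it here becomes a type error.
      const unreachable: never = intent;
      throw new Error(`Unhandled intent: ${JSON.stringify(unreachable)}`);
    }
  }
}
```

For example, `renderForIntent({ kind: "weather", city: "Chicago", tempC: 21, condition: "Sunny" })` yields a `WeatherCard` description with `temperature: "21°C"`. Keeping the output as data rather than markup leaves the actual drawing to a design-system-aware renderer.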

This applies to many use cases, such as data visualization, content formatting, and media layouts; so many, in fact, that a product team has to decide which ones to invest in delivering. In theory, generative UI could significantly cut the delivery time of functionality and free the entire product team (designers included) to work on Next and Later initiatives that might otherwise never be reached.

Exploring example cases

Use cases for generative UI are difficult to communicate, especially given the principle that generative UI can produce a unique result each time for the same use case. To show generative UI at work visually, take a look at some of the videos I've created to explore speculative scenarios I've discussed with others in the field.

Intelligence in real-world scenarios

When we think about generating something out of the blue, we often think about a system's intelligence within a chaotic, noisy environment. Especially considering the interoperability between systems in an environment, generative actions are not only viable but desirable. Take, for example, collaboration software that uses commentary from a call to suggest creating assets for the team's upcoming workshop.

This assumes the relevant actions and integrations are available to the user, so an architecture that supports these kinds of generated queries is a prerequisite. The bigger point of this example is that operational actions require consent: if the suggested action isn't what the user wants at that moment, they can simply carry on.
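The two conditions in the workshop example (the integration must be available, and the user must consent) can be sketched as a small gate around a suggested action. This is a minimal illustration under assumed names: `SuggestedAction`, `handleSuggestion`, and the `decide` callback are hypothetical, and `run` is a stub standing in for whatever the real integration would do.

```typescript
// Hypothetical suggestion produced from call commentary.
interface SuggestedAction {
  id: string;
  label: string;        // e.g. "Create workshop board"
  integration: string;  // integration that must be connected for this to work
  run: () => string;    // stubbed side effect; returns a result description
}

type Decision = "accept" | "dismiss";

// Only offer suggestions whose integration is actually available, and only
// execute them after the user explicitly accepts.
function handleSuggestion(
  action: SuggestedAction,
  availableIntegrations: Set<string>,
  decide: (a: SuggestedAction) => Decision,
): string | null {
  if (!availableIntegrations.has(action.integration)) {
    return null; // cannot be fulfilled, so the user is never prompted
  }
  if (decide(action) === "accept") {
    return action.run(); // consent given: perform the action
  }
  return null; // dismissed: the user's workflow continues unchanged
}
```

The design choice worth noting is that dismissal is the default outcome: nothing happens unless the user opts in, which matches the "they can always continue along" principle above.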

Accessibility (and by effect, personalization)

A large share of UX effort goes into creating one shared experience that meets all users' needs, including accessibility needs. Taking in parameters that describe a user's needs on any public device could, in principle, produce a view that caters to that user by determining their use case. We have technology today that lets devices communicate with one another, so why can't we use it to standardize desired experiences for everyone?
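As a rough sketch of "intaking parameters" on a shared device, the snippet below derives a view configuration from a user's stated needs. It is deliberately deterministic: it shows the kind of input and output a generative system would work with, not the generation itself. The profile fields, `ViewConfig`, `viewFor`, and the specific values (a 24 px font for large text, for instance) are all hypothetical.

```typescript
// Hypothetical accessibility parameters a user's device might share.
interface AccessibilityProfile {
  prefersLargeText: boolean;
  screenReader: boolean;
  reducedMotion: boolean;
  language: string;
}

// Hypothetical settings a public kiosk or terminal could apply.
interface ViewConfig {
  baseFontPx: number;
  animations: boolean;
  layout: "visual" | "linear";
  language: string;
}

const DEFAULT_VIEW: ViewConfig = { baseFontPx: 16, animations: true, layout: "visual", language: "en" };

// Any parameter the user does not share falls back to the shared default view.
function viewFor(profile: Partial<AccessibilityProfile>): ViewConfig {
  return {
    baseFontPx: profile.prefersLargeText ? 24 : DEFAULT_VIEW.baseFontPx,
    animations: profile.reducedMotion ? false : DEFAULT_VIEW.animations,
    // A single-column reading order is easier to navigate with a screen reader.
    layout: profile.screenReader ? "linear" : DEFAULT_VIEW.layout,
    language: profile.language ?? DEFAULT_VIEW.language,
  };
}
```

Because the default view is still the baseline, a user who shares nothing gets the same shared experience as today; personalization only adds to it.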

There is a pitfall here: product teams in these domains should avoid personalizing interfaces only for high-value customers and should also consider customers who need access (and who may be high-value as well).

Predictability

With millions of example interactions that establish good practice through heuristics, along with millions of bad examples, generating an interface becomes a matter of pragmatism rather than practice. I believe generative UI thrives most as a concept in augmented reality (AR) experiences, where unforeseen scenarios call for presenting data that may or may not have been represented as information before.

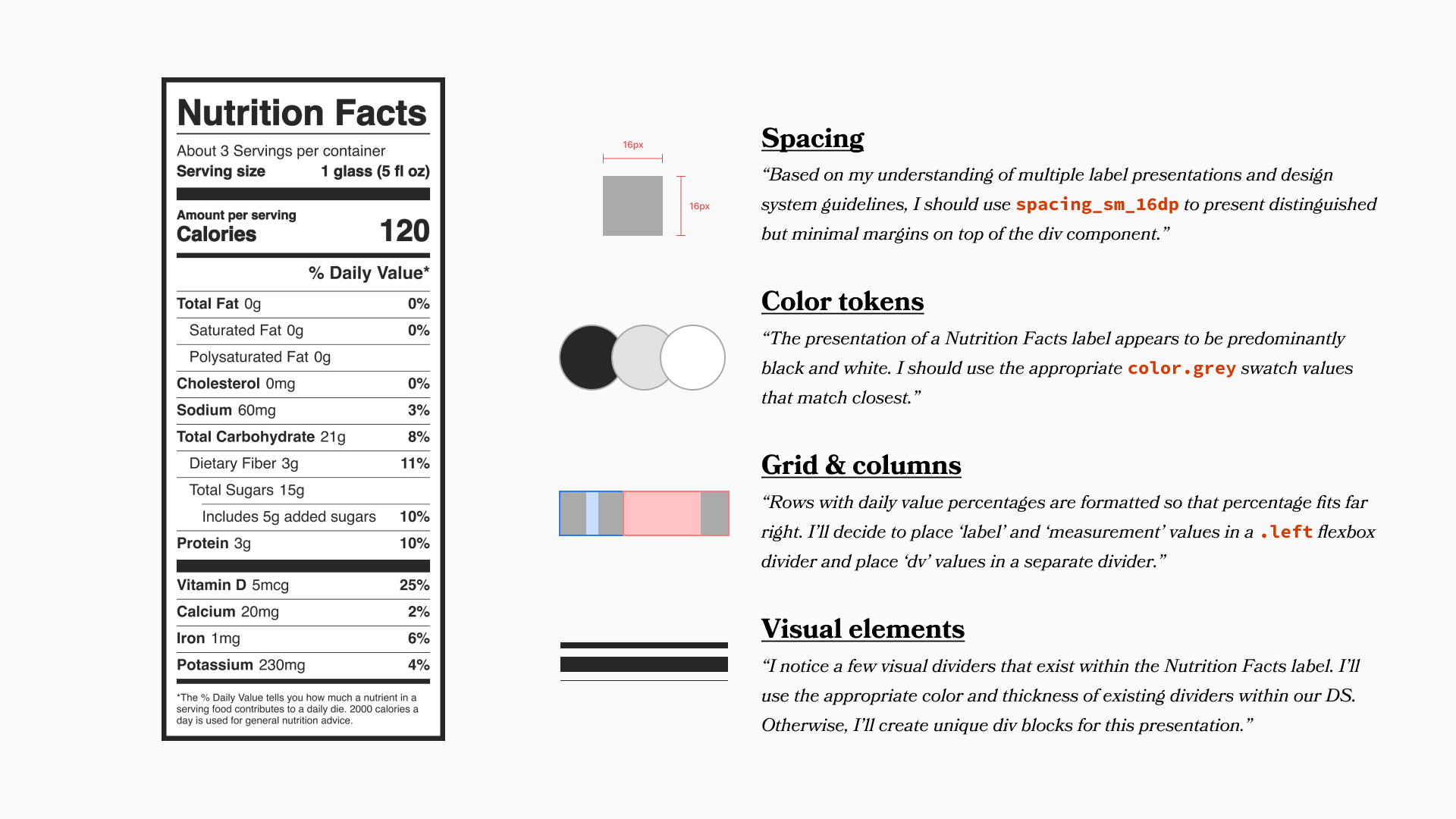

I discussed the concept of generative UI in AR with one of my colleagues: would you rather read through a text block to find the calorie count of a glass of wine, or glance at the calories section you see on every nutrition label? Can you validate that with a user group? They like it? Great! Now do the same for countless cases of cognitive information retrieval.

Interface models will never be a black box

A book will always have a cover, a spine, and pages that serve as its foundation; only the content on and within the book changes. Likewise, if interfaces are generative, they will render directly from a model akin to a foundational design system.

Design systems contain the atoms required for an interface. By understanding how different atoms relate as they compose a molecule, a model can reproduce that molecule in an identical scenario. Like any intelligent model, a model built to generate UI needs to be trained and fine-tuned on what constitutes an appropriate render of the UI.
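One way to keep a generative model from becoming a black box is to check its output against the design system before anything renders. The sketch below assumes a hypothetical registry (`DESIGN_SYSTEM`) where each component lists the children it may contain, so atoms have none and molecules list their allowed atoms. `UINode` and `validate` are illustrative names, not part of any real design-system tooling.

```typescript
// Hypothetical design-system registry: component name -> allowed children.
// Atoms (Text, Icon) allow no children; molecules list the atoms they compose.
const DESIGN_SYSTEM: Record<string, readonly string[]> = {
  Text: [],
  Icon: [],
  Button: ["Text", "Icon"],
  Card: ["Text", "Icon", "Button"],
};

// A generated UI tree, as a model might emit it.
interface UINode {
  component: string;
  children?: UINode[];
}

// Returns every violation found; an empty array means the generated tree
// only composes components in ways the design system allows.
function validate(node: UINode, path: string = node.component): string[] {
  const allowed = DESIGN_SYSTEM[node.component];
  if (allowed === undefined) {
    return [`${path}: unknown component "${node.component}"`];
  }
  const errors: string[] = [];
  for (const child of node.children ?? []) {
    const childPath = `${path} > ${child.component}`;
    if (!allowed.includes(child.component)) {
      errors.push(`${childPath}: not allowed inside ${node.component}`);
    }
    // Recurse so nested molecules are checked too.
    errors.push(...validate(child, childPath));
  }
  return errors;
}
```

A `Card` containing `Text` and a `Button` with `Text` passes with no errors, while a `Button` wrapping a `Card` is rejected with one error. Rejected trees could be regenerated or fall back to a hand-designed default, which keeps every render traceable to the design system.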

We often joke that UI design is circles and squares. To a machine, that could most certainly be a valid interpretation.

Accelerating the death of the UI designer

Technology has evolved rapidly over the past 30 years, and even more so over the past four. Designers continue to face pressure from business partners and product teams to go beyond simply designing interfaces: we are asked to research, code, create design systems, and facilitate activities with product, engineering, business, and more. So, as designers become able to automate the creation of UI for one or more use cases, they can take on other initiatives and work with other teams on efforts that might otherwise have been picked up much later, or never. Imagine a moment when Next and Later features on the roadmap are finally within reach to validate!

While Dribbble UIs are a necessary evil that has helped countless designers tell the dos from the don'ts, we are at a unique crossroads in deciding whether to let data drive our decision-making when creating interfaces. For every business looking to "streamline operations," there is an angry army of craftspeople waiting to revolt. For fellow designers, the question is what a future looks like in which designers continue to show value across business domains rather than being made obsolete by Big AI.

Even if we all took that leap of faith, delivering a new kind of interface requires an architecture that reliably automates and surfaces intelligence across all layers. If we can have this conversation about UI, who's to say we can't have the same conversation about component rendering, real-time integration testing, or reactive querying?

I'm working on a couple of awesome projects that only scratch the surface of this space, so stay tuned. Thanks for reading!◾