The Wild West of the United States' AI regulation landscape

AI needs some deputies, or at least some concerned citizens.

2025's "Trump Era 2 - Electric Boogaloo" has clearly gotten off to a wild start, with government policy at the center of everything from wildfires to immigration. Flying under the radar was the rescission of an executive order on AI policy, and there are strong sentiments over whether it should have been kept or removed. Conservatives will tell you that deregulation clears the playing field for innovation; progressives will point you to the abuses, and potential abuses, of the technology itself. Both sides are equally right, and when pitted against each other, equally wrong.

State laws taking effect this year have offered some sort of answer to the flame, but they raise a root concern: a regulatory patchwork that businesses operating across state lines will struggle to navigate. In a time of heightened global tensions, AI has become a critical front in the race for technological superiority and advancement, the most recent example being China's DeepSeek reasoning models outperforming anything from the US on cost for capability. I would go as far as to say that technology is one of the United States' last competitive advantages, and the influence Big Tech has lately had on the new administration visibly validates this.

What are we peasants capable of

I have a simple proposal: we need to find it in ourselves to either A) influence federal representatives to push forward legislation that creates alignment for AI systems, or B) work on influencing and building ethical technology ourselves.

I was one of the people who watched HBO's Silicon Valley and laughed at the idea of "Tethics" coming from the most unironic of tech oligarchs, Gavin Belson. Then I read it in detail and slowly came to realize that this is how every B2B SaaS startup founder in South San Francisco talks about their company values.

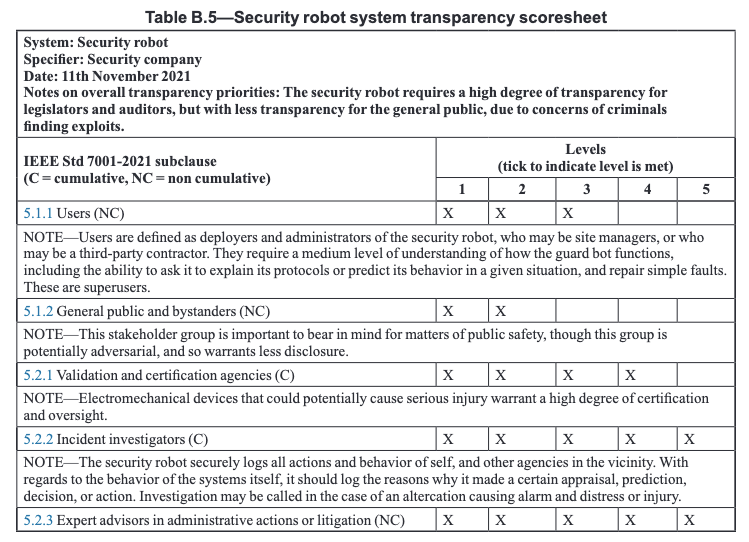

Of course, there's no need to adopt Tethics 2.0: we already have an abundance of frameworks and standards for building AI, not just from our regulation-crazed neighbors in the EU but from our academic partners in the US, too. The Institute of Electrical and Electronics Engineers (IEEE, aka the sound I make when brushing my teeth) has created a standards series on AI ethics and governance. I believe all you need to access these as PDFs is to sign up for a free account and say that you go to some school. I am currently reading through the Standard for Transparency of Autonomous Systems (IEEE 7001) and have already noted some working models that could benefit generative AI solutions. It weighs how much transparency, if any, is required to keep users informed while keeping them safe from exploitation by other humans or systems.

On the topic of legislation, living in the US comes with certain liberties. We tend to forget that those voted into office answer to the people who elected them. There are many ways to speak to legislators about the risks of AI and the need for guardrails. Personally, it's not an area I've been heavily involved in yet, and I plan to update you when I have more cohesive thoughts.

Making the climb

I may eat my own words, but I do not think humans will stay ignorant of the consequences when they have both information and the capabilities of technology at their fingertips. AI is not uncharted territory for technology: for years we have been building ML and deep learning systems that have made their way into user-facing experiences. Just this week, we have already seen company leaders push back against anti-DEI language and recommit to their organizational values. It is high time for organizations and their leaders to build AI in a way that truly reflects the messaging on their ethics and core values webpages.

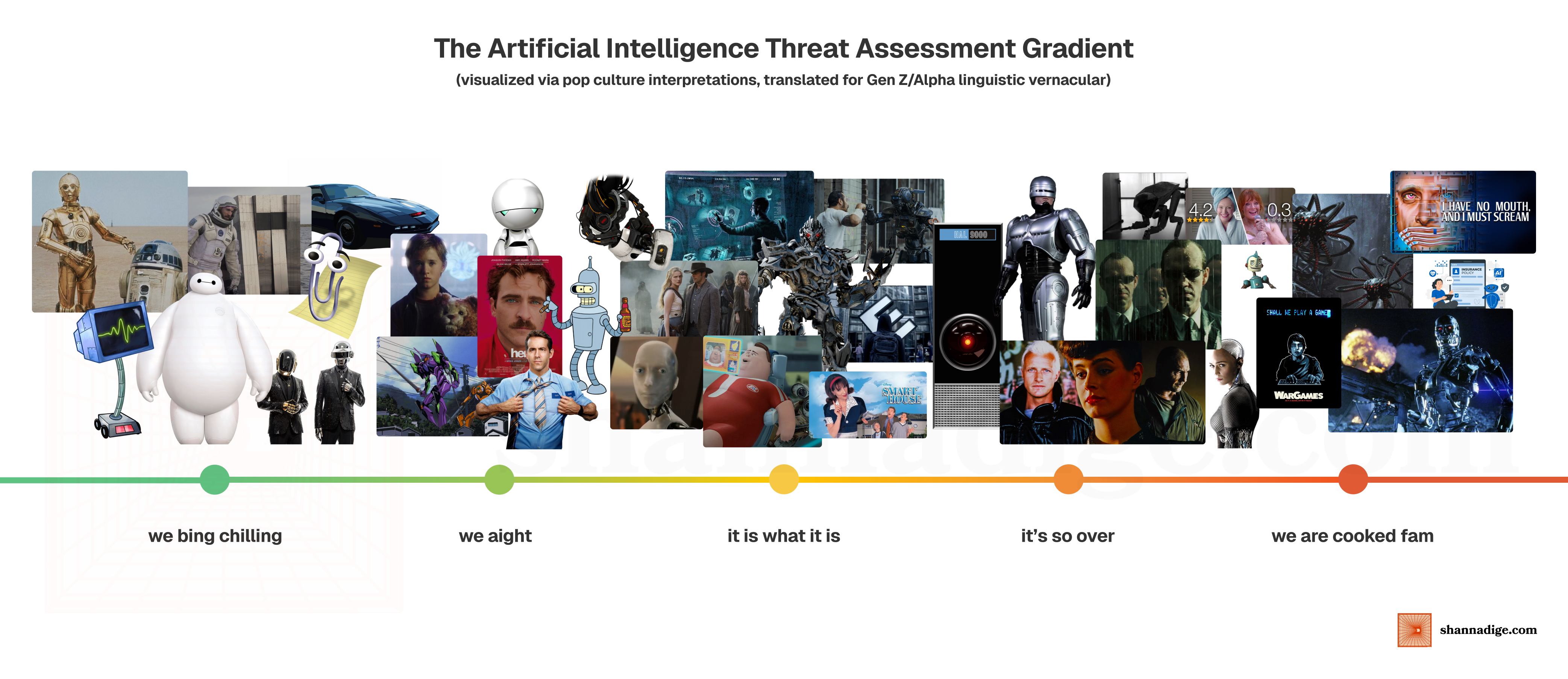

I've also had time to dwell on the worst-case scenarios. One thing I think is missing from modern publications is a scale that helps practitioners and engineers classify the threat level of the AI systems they build. Just in case, I translated it into a more understandable context for young audiences who may stumble upon this scale one day when it's too late.

If you've made it this far, you're probably someone who cares about responsible AI development. We can treat AI development as a competitive race to the top, or we can recognize that some challenges are too important for siloed thinking. What if we spent less time guarding our competitive advantages and more time sharing capabilities to make AI development safe and ethical? What if we measured success not just in quarterly profits, but in positive societal impact?

I know these questions might sound idealistic. Your leaders might dismiss them as naive or impractical. But remember: the most transformative changes often start with people who dare to ask uncomfortable questions. And if you're stuck with leaders who are absolutely hellbent on a vision that looks like this, please send me an email so I can offer you free counseling sessions. ■